
Character animation has struggled with a fundamental trade-off: creating motions that are both physically realistic and precisely controllable. This challenge isn’t limited to video game development or animation – it impacts robotic simulation as well. Researchers at the RAI Institute have bridged the gap between kinematic motion diffusion models and physics-based control policies with a new guided diffusion framework called Diffuse-CLoC.

Challenges with Realistic Movement and Steerability

There are typically two approaches to building a character whose movement is both realistic and steerable: kinematic motion generation with diffusion models, and physics-based control policies. Each falls short in a different way.

Kinematic motion diffusion models offer intuitive steering through inference-time conditioning; however, they often produce physically invalid motions, such as characters floating above the ground, sliding feet, or other physically impossible movements.

Physics-based control policies, in contrast, generate motions that obey the laws of physics by construction, because the character is driven through a physics simulation. These policies learn to control the agent’s actions by interacting with a simulated environment and receiving feedback on their performance, typically through reinforcement learning (RL). Unlike kinematic diffusion models, however, they must be retrained to adapt to new scenarios, because inference-time conditioning isn’t practical: a policy outputs actions, and there is no direct way to relate goals specified in state space (for example, a target pose or location) to the actions it produces. For the same reason, common techniques such as inpainting, in which known parts of a motion (such as fixed keyframe poses) are held in place while the model fills in the missing parts, can’t be applied.

Both approaches have pros and cons, but Diffuse-CLoC combines the advantages of each while mitigating many of the challenges associated with steerability and realistic movement.

A Novel Solution: Joint State-Action Diffusion

Diffusion models are a class of deep generative models that have become a popular way to create realistic robot motions and behaviors. During training, they learn to reverse a process that gradually corrupts data with noise; at generation time, they start from pure noise and remove it step by step until a clean sample emerges. This iterative refinement helps them produce high-fidelity data, and because each sample starts from different random noise, the same model can generate diverse outputs. Diffuse-CLoC builds on this concept, turning pure noise into clean, deployable motions and control signals.
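The generate-by-denoising loop described above can be sketched in a few lines. This is a minimal, generic DDPM-style sampler in plain Python, not Diffuse-CLoC’s actual sampler: the linear noise schedule and its constants (`beta_start`, `beta_end`) are common defaults assumed for illustration, and `predict_noise` is a hypothetical stand-in for the trained network that estimates the noise in its input.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule: per-step variances (betas), alphas = 1 - beta,
    and their running products alpha_bar (how much signal survives to step t)."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1)
             for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)
    return betas, alphas, alpha_bars


def sample(predict_noise, dim, num_steps, rng):
    """Start from pure Gaussian noise and remove a little of it at every step."""
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # pure noise
    for t in reversed(range(num_steps)):
        eps_hat = predict_noise(x, t)  # model's estimate of the noise in x
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t])
                for xi, ei in zip(x, eps_hat)]
        if t > 0:
            # Re-inject a small amount of fresh noise on every step but the last.
            x = [m + math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


# Toy check: if the "data" is a single pose, the ideal noise predictor is known
# in closed form, and sampling recovers that pose from pure noise.
target = [1.0, -2.0]
_, _, alpha_bars = make_schedule(50)


def oracle(x, t):
    a = alpha_bars[t]
    return [(xi - math.sqrt(a) * gi) / math.sqrt(1.0 - a)
            for xi, gi in zip(x, target)]


print(sample(oracle, dim=2, num_steps=50, rng=random.Random(0)))
# prints values very close to [1.0, -2.0]
```

A real model replaces `oracle` with a learned network that has only seen example motions, so it produces new samples rather than a single memorized pose.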

The aim of Diffuse-CLoC is to train an end-to-end state-action diffusion model that can handle a variety of unseen, long-horizon downstream tasks for a physics-based character without needing retraining. It models the joint distribution of both states and actions within a single diffusion model, making action generation steerable by conditioning it on predicted future states.

In the simplest terms, instead of separately planning where a character should move and then figuring out how to control it to achieve that movement, Diffuse-CLoC simultaneously considers both the destination and the means of getting there. This approach allows the system to leverage established techniques from kinematic motion generation while ensuring the resulting motions are physically plausible.
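One simple way to picture “modeling states and actions jointly” is to stack each time step’s state and action into a single vector, so that one denoiser corrupts and restores both together. The sketch below assumes a flat list-of-floats layout; the actual tokenization and state/action contents used by Diffuse-CLoC are not described here, and the `Step` type and helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    state: List[float]   # e.g. body pose and velocities (hypothetical contents)
    action: List[float]  # e.g. joint targets for the controller (hypothetical)


def to_joint_sequence(steps):
    """Concatenate each step's state and action into one vector; a single
    diffusion model then noises and denoises the whole sequence at once."""
    return [list(s.state) + list(s.action) for s in steps]


def split_joint_sequence(seq, state_dim):
    """Inverse of to_joint_sequence: read states and actions back out."""
    return [Step(state=v[:state_dim], action=v[state_dim:]) for v in seq]


trajectory = [Step([0.0, 1.0], [0.1]), Step([0.2, 0.9], [0.3])]
joint = to_joint_sequence(trajectory)
print(joint)  # [[0.0, 1.0, 0.1], [0.2, 0.9, 0.3]]
```

Because states and actions live in the same sample, anything that constrains the states during denoising (a goal position, a keyframe) also influences the actions generated alongside them.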

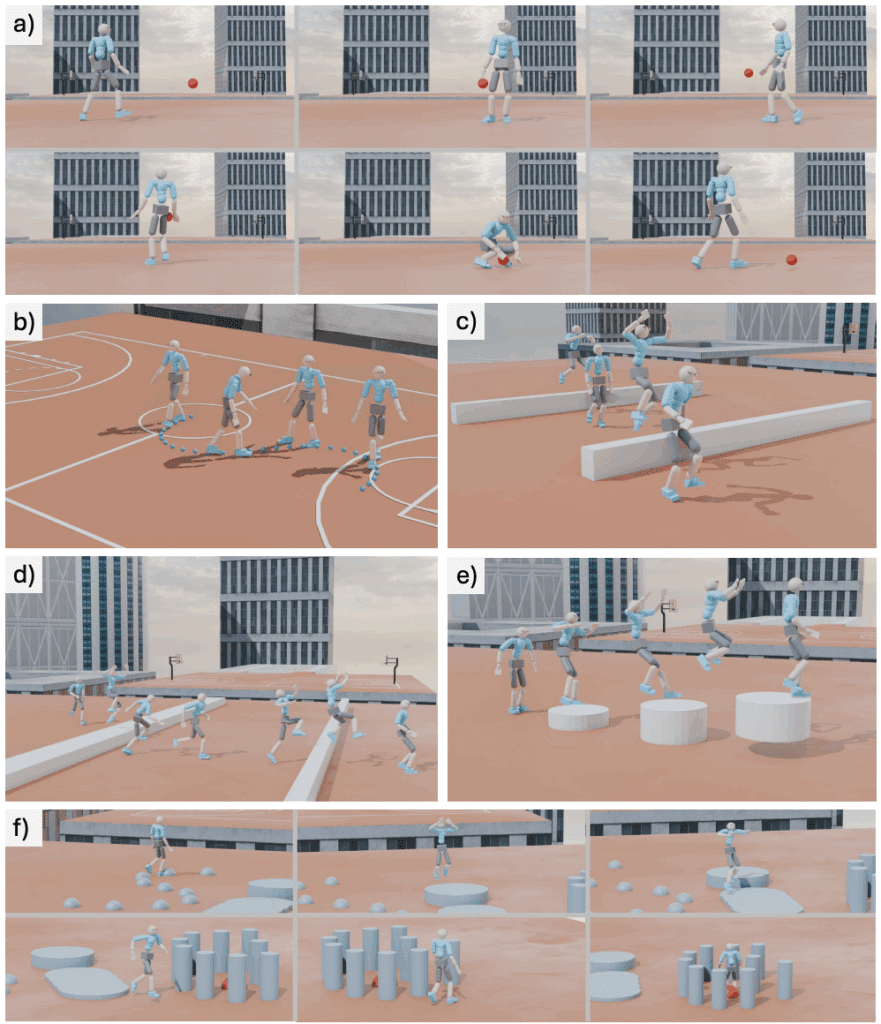

Diffuse-CLoC can be applied to character tasks such as smoothly navigating around obstacles, producing plausible transitions between keyframe poses (motion in-betweening), jumping between pillars of increasing height, and steering the character to target locations.

How Diffuse-CLoC Works

Diffuse-CLoC is based on denoising diffusion models, which are well suited to generating sequences such as motion over time. At each time step, the model predicts a sequence of future actions together with the resulting body states several steps ahead, conditioned on the character’s recent past movements.
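The “predict the future, conditioned on the past” setup can be made concrete by showing how a recorded trajectory might be sliced into training windows. This is a hedged sketch: `history` and `horizon` are hypothetical parameter names (the article does not state the window sizes), and it assumes the convention that action `a_t` takes the character from state `s_t` to `s_{t+1}`.

```python
def make_window(states, actions, t, history, horizon):
    """Slice a recorded trajectory around time t.

    Assumes len(actions) == len(states) - 1, with actions[i] moving the
    character from states[i] to states[i + 1].
    Returns:
      context: the last `history` states up to and including t
      target_states: states t+1 .. t+horizon the model should predict
      target_actions: actions t .. t+horizon-1 that produce those states
    """
    if t - history + 1 < 0 or t + horizon >= len(states):
        raise ValueError("window does not fit inside the trajectory")
    context = states[t - history + 1 : t + 1]
    target_states = states[t + 1 : t + horizon + 1]
    target_actions = actions[t : t + horizon]
    return context, target_states, target_actions


states = [f"s{i}" for i in range(10)]
actions = [f"a{i}" for i in range(9)]
print(make_window(states, actions, t=4, history=3, horizon=2))
# (['s2', 's3', 's4'], ['s5', 's6'], ['a4', 'a5'])
```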

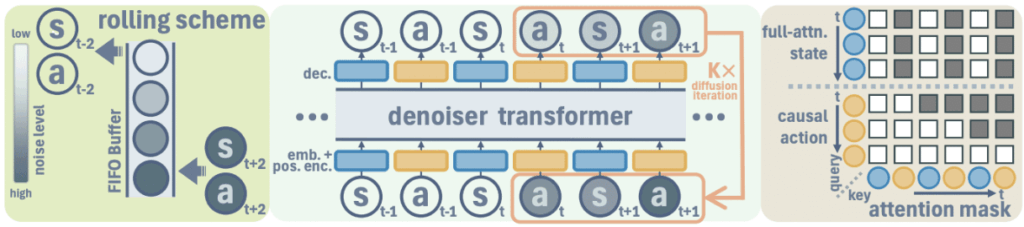

The rolling scheme works like a conveyor belt that always holds a fixed number of items. At each moment, a new noisy state and action are added to the end of the belt, and the oldest (now clean) state-action pair is removed and used to advance the simulation.

The denoiser at the center acts like a filter that cleans up the states and actions on the belt. Items that have been on the belt longer have passed through the filter more times, so they carry less noise than newly added ones. If an actual observation, such as a direct measurement of the character’s current state, is available, it is placed into the sequence as-is; only the still-noisy future predictions are cleaned up. Note that states and actions can carry different amounts of noise.

Finally, the mask options on the right of the figure control what information each part of the model attends to. In diffusion policies, masking hides certain parts of the input during training or inference. This lets the model focus on specific aspects of the task and can improve its ability to generalize; training with masked data also helps the model cope with variations in the environment or task.
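The conveyor-belt picture can be sketched as a small rolling buffer. This is an illustrative sketch under several assumptions: `denoise_fn` is a hypothetical stand-in for the trained denoiser, each slot carries a single integer noise level shared by its state and action (the article notes the two can differ), and the buffer starts from a plain staircase of noise levels rather than the warm-up a real system would need. What it shows is the steady state: after every control step, the slot being executed is fully denoised, and the newest slot at the back is pure noise.

```python
import random
from collections import deque


class RollingBuffer:
    """Conveyor belt of `horizon` future (state, action) slots. Noise level rises
    from the front (executed next) to the back (furthest into the future)."""

    def __init__(self, horizon, state_dim, action_dim, rng):
        self.horizon = horizon
        self.state_dim = state_dim
        self.action_dim = action_dim
        self.rng = rng
        # Staircase of noise levels 1..horizon (a real system would warm up first).
        self.slots = deque(self._noisy_slot(level=i + 1) for i in range(horizon))

    def _noisy_slot(self, level):
        def noise(n):
            return [self.rng.gauss(0.0, 1.0) for _ in range(n)]
        return {"state": noise(self.state_dim),
                "action": noise(self.action_dim),
                "level": level}

    def step(self, observed_state, denoise_fn):
        """One control step: denoise every slot by one level, pop the now-clean
        front slot for execution, and append a pure-noise slot at the back."""
        for slot in self.slots:
            # The observed state is passed as clean context and is never noised.
            denoise_fn(slot, observed_state)
            slot["level"] -= 1
        front = self.slots.popleft()  # level 0: ready to execute
        self.slots.append(self._noisy_slot(level=self.horizon))
        return front["action"]


def toy_denoise(slot, observed_state):
    """Placeholder for the learned denoiser: just shrinks values toward zero."""
    slot["state"] = [0.5 * v for v in slot["state"]]
    slot["action"] = [0.5 * v for v in slot["action"]]


buffer = RollingBuffer(horizon=4, state_dim=3, action_dim=2, rng=random.Random(0))
for _ in range(5):
    action = buffer.step(observed_state=[0.0, 0.0, 0.0], denoise_fn=toy_denoise)
print([slot["level"] for slot in buffer.slots])  # [1, 2, 3, 4]
```

Because each slot is refined a little at every step instead of being regenerated from scratch, consecutive plans share most of their content, which is the consistency property the rolling scheme is meant to provide.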

Training and guiding the diffusion-based policy

The model is trained on clean motion data: noise is added to clean sequences of movements, and the model learns to remove that noise and recover the original motion. This training is self-supervised, meaning it needs no explicit labels beyond the motion data itself.
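The self-supervised training step described above reduces to a few lines: pick a random noise level, corrupt a clean example, and score how well the model recovers the noise. This sketch uses the common noise-prediction (epsilon) objective; the article does not say which parameterization Diffuse-CLoC uses, and the short `alpha_bars` schedule and `predict_noise` callable are hypothetical placeholders.

```python
import math
import random


def training_loss(x0, alpha_bars, predict_noise, rng):
    """One self-supervised training example: no labels, just clean data.
    alpha_bars[t] is the fraction of signal kept at noise level t."""
    t = rng.randrange(len(alpha_bars))
    a_bar = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]  # the noise we add
    noisy = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
             for x, e in zip(x0, eps)]
    eps_hat = predict_noise(noisy, t)  # the model's guess at that noise
    # Mean squared error between the true and predicted noise.
    return sum((e - g) ** 2 for e, g in zip(eps, eps_hat)) / len(x0)


alpha_bars = [0.99, 0.9, 0.5, 0.1, 0.01]  # toy, decreasing schedule
clean_motion = [0.3, -0.7, 1.2]  # e.g. a flattened state-action snippet

# A model that always predicts zero noise is penalized...
print(training_loss(clean_motion, alpha_bars, lambda x, t: [0.0] * len(x), random.Random(0)))


# ...while one that recovers the noise exactly scores (numerically) zero.
def oracle(noisy, t):
    a = alpha_bars[t]
    return [(n - math.sqrt(a) * x) / math.sqrt(1.0 - a)
            for n, x in zip(noisy, clean_motion)]


print(training_loss(clean_motion, alpha_bars, oracle, random.Random(0)))
```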

While these models are usually trained to generate movements freely, our researchers found a way to guide them toward specific movements. Given a cost function that scores candidate movements (for example, distance from a goal), the system can use that cost during denoising to steer the character in the desired direction. Guidance techniques such as classifier guidance and inpainting work well for constraining the character’s body positions (states), but are hard to apply to the actions the character takes, because goals are rarely expressed directly in terms of actions.

This new approach, however, co-diffuses the character’s body positions and actions, denoising both together. Because guidance applied to the states also shapes the actions generated alongside them, the model not only generates realistic motions but can also act as a high-level controller, deciding which actions the character should take.
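To make the two guidance tools concrete, the sketch below applies them to predicted states, here simplified to hypothetical 2D root positions: a gradient step down a goal-reaching cost (the idea behind cost- or classifier-style guidance) and inpainting of known keyframes. In a guided sampler, operations like these would run inside each denoising step; the quadratic cost, the `weight` step size, and the function names are illustrative assumptions, not Diffuse-CLoC’s code. Note that this cost depends only on states, so its gradient with respect to actions is zero, which is why guiding actions directly is hard and why co-diffusing states and actions helps.

```python
def guide_states(states, goal, weight):
    """Gradient step on the cost c = sum_t ||p_t - goal||^2 over predicted
    root positions p_t = (x, y); pulls the trajectory toward the goal."""
    guided = []
    for x, y in states:
        grad_x, grad_y = 2.0 * (x - goal[0]), 2.0 * (y - goal[1])
        guided.append((x - weight * grad_x, y - weight * grad_y))
    return guided


def inpaint(states, keyframes):
    """Overwrite the time steps whose poses are known (e.g. keyframes);
    the denoiser is left to fill in everything in between."""
    out = list(states)
    for index, pose in keyframes.items():
        out[index] = pose
    return out


predicted = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
print(guide_states(predicted, goal=(1.0, 0.0), weight=0.25))
# [(0.5, 0.0), (1.0, 0.5), (1.5, 1.0)]
print(inpaint(predicted, {1: (5.0, 5.0)}))
# [(0.0, 0.0), (5.0, 5.0), (2.0, 2.0)]
```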

New architecture

The researchers designed a new transformer-based diffusion architecture and attention setup to model the joint distribution of states and actions, allowing conditional action generation via steerable motion synthesis. Its attention mechanism is non-causal for states and causal for actions. This structure lets the system look ahead at future desired body positions and use that information to decide the current actions, making the character’s movements more robust and steerable and helping it accomplish complex, long-horizon tasks.
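The mixed attention pattern can be written as a boolean mask over a token sequence that lists all state tokens followed by all action tokens. This sketch assumes that layout and one reading of the description: states attend to every state (non-causal), actions attend to every state including future ones (the look-ahead), and actions attend causally to earlier actions. Whether state tokens attend to action tokens is not specified in the article; here they do not. `build_attention_mask` is a hypothetical helper.

```python
def build_attention_mask(horizon):
    """Boolean mask over tokens [s_0 .. s_{H-1}, a_0 .. a_{H-1}].
    mask[i][j] is True when token i is allowed to attend to token j."""
    n = 2 * horizon
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            i_is_state, j_is_state = i < horizon, j < horizon
            if i_is_state:
                # States: non-causal among themselves; no attention to actions.
                mask[i][j] = j_is_state
            elif j_is_state:
                # Actions may look ahead at every state, including future ones.
                mask[i][j] = True
            else:
                # Actions among themselves: causal, a_t sees only a_0 .. a_t.
                mask[i][j] = j <= i
    return mask


for row in build_attention_mask(horizon=3):
    print("".join("1" if allowed else "." for allowed in row))
# 111...
# 111...
# 111...
# 1111..
# 11111.
# 111111
```

Libraries differ on whether `True` in a boolean attention mask means “may attend” or “masked out”, so a mask like this may need inverting before it is passed to a transformer layer.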

Optimized rolling inference scheme

An optimized rolling inference scheme has also been introduced for autoregressive action generation, enabling interactive execution of agile motions and significantly improving consistency and speed. Traditional replanning approaches can cause characters to oscillate between different plans, producing jerky or inconsistent behavior. Diffuse-CLoC addresses this by maintaining a buffer in which noise levels are assigned by temporal proximity: immediate actions carry little noise, making them more certain, while distant future predictions carry more noise, which keeps them flexible.

Results

In benchmark comparisons, Diffuse-CLoC significantly outperforms traditional hierarchical approaches that separate kinematic planning from physics-based tracking:

- In obstacle jumping tasks, the traditional approach achieved a success rate of only 22%, while Diffuse-CLoC achieved 71%.

- The system also produces higher-quality motion, with fewer artifacts such as foot sliding or floating.

Robotic Applications and Beyond

Diffuse-CLoC represents a significant step toward more flexible, generalizable character control systems. The ability to handle diverse tasks with a single pre-trained model could dramatically reduce development time and computational requirements.

While the current results are primarily in simulation, the long-term goal is to test these techniques on hardware. A major objective is to distill multiple specialized policies – such as walking, climbing stairs, or manipulating objects – into one generalized policy that can perform all these actions.

Beyond robotics, Diffuse-CLoC could open new possibilities in gaming and animation as well. For example, this approach could be applied to game characters, making them more responsive to control and more realistic in their on-screen behavior. It bridges the gap between controllable motion generation and physical realism, so you don’t have to sacrifice steerability and control for realistic movement.

Overall, the creation of Diffuse-CLoC brings the RAI Institute closer to the overarching goal of developing generalist policies that enable humanoid robots to perform diverse task categories such as dynamic locomotion and contact-rich manipulation in a natural-looking and robust way.

Diffuse-CLoC: Guided Diffusion for Physics-based Character Look-ahead Control is authored by Xiaoyu Huang, Takara Truong, Yunbo Zhang, Fangzhou Yu, Jean Pierre Sleiman, Jessica Hodgins, Koushil Sreenath, and Farbod Farshidian.