Abstract

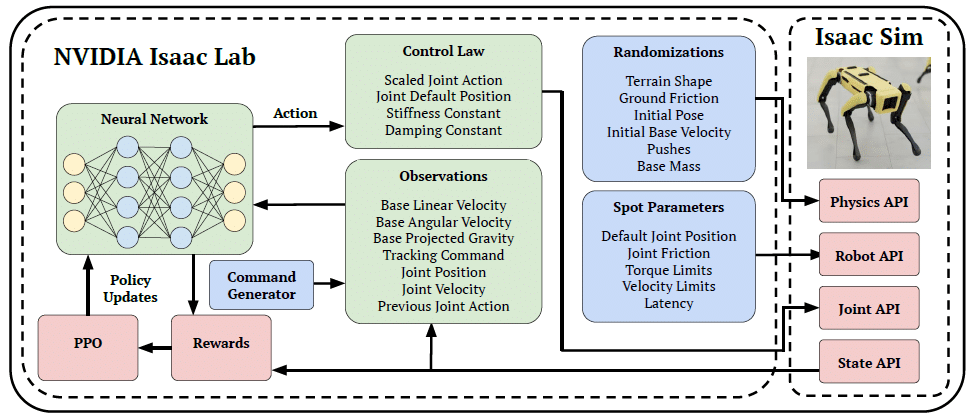

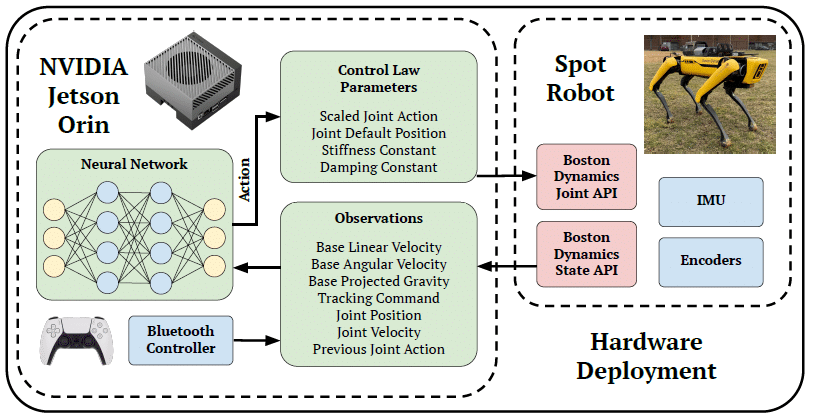

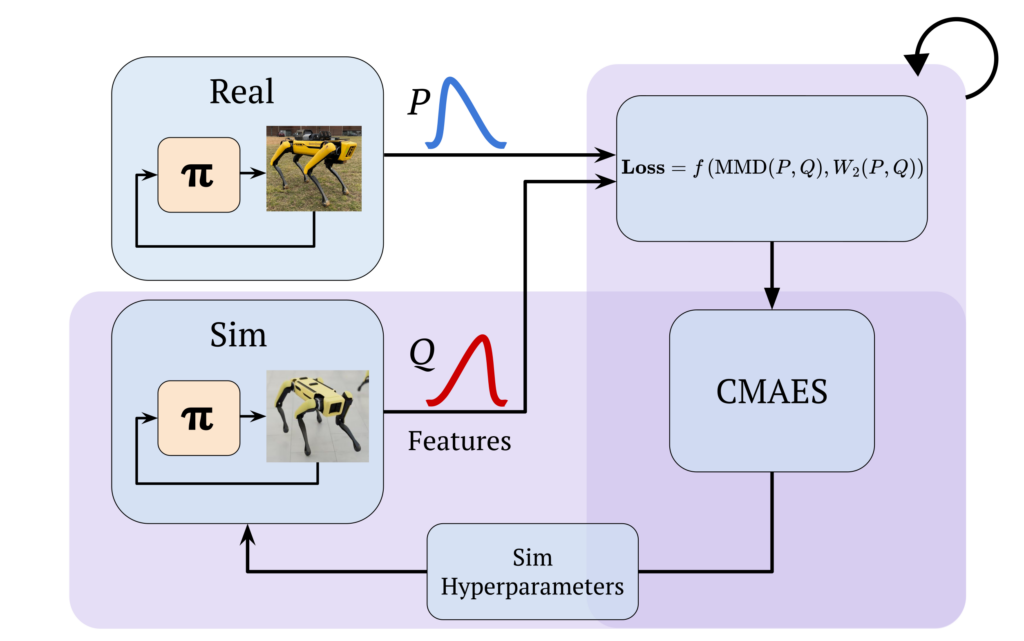

This work presents an overview of the technical details behind a high-performance reinforcement learning policy deployed on Boston Dynamics' Spot using the Spot RL Researcher Development Kit, which provides low-level motor access. This is the first public demonstration of an end-to-end reinforcement learning policy deployed on Spot hardware, with training code publicly available through NVIDIA Isaac Lab and deployment code available through Boston Dynamics. We use Wasserstein distance and Maximum Mean Discrepancy (MMD) to quantify the distributional dissimilarity between data collected on hardware and in simulation, which gives us a measure of the sim-to-real gap. These measures serve as the scoring function for the Covariance Matrix Adaptation Evolution Strategy (CMA-ES), which optimizes simulation parameters that are unknown or difficult to measure on Spot. Our modeling and training procedure produces high-quality reinforcement learning policies capable of multiple gaits, including gaits with a flight phase. We deploy policies that reach speeds above 5.2 m/s, more than triple the maximum speed of Spot's default controller, and that exhibit robustness to slippery surfaces, disturbance rejection, and overall agility previously unseen on Spot. We detail our method and release our code to support future work on Spot with the low-level API.
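The idea of scoring simulated data against hardware data with Wasserstein distance and MMD can be illustrated with a minimal NumPy sketch. It assumes logged data arrive as arrays of shape (samples, channels), for example joint positions or torques. The function names, the Gaussian RBF kernel and its bandwidth, and the way the two measures are summed into one number are illustrative assumptions, not the authors' released implementation.

```python
import numpy as np


def wasserstein_1d(u, v):
    """Wasserstein-1 distance between two 1-D empirical distributions.

    Integrates |F_u(t) - F_v(t)| over t, where F_u and F_v are the
    empirical CDFs of the samples u and v (sample sizes may differ).
    """
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    all_values = np.sort(np.concatenate([u, v]))
    deltas = np.diff(all_values)
    # Empirical CDFs evaluated at the left end of each interval.
    u_cdf = np.searchsorted(u, all_values[:-1], side="right") / u.size
    v_cdf = np.searchsorted(v, all_values[:-1], side="right") / v.size
    return float(np.sum(np.abs(u_cdf - v_cdf) * deltas))


def rbf_kernel(a, b, bandwidth):
    """Gaussian kernel matrix k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 h^2))."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clamp tiny negative values caused by floating-point cancellation.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def mmd_squared(x, y, bandwidth=1.0):
    """Biased estimate of squared MMD between samples x (n, d) and y (m, d)."""
    k_xx = rbf_kernel(x, x, bandwidth).mean()
    k_yy = rbf_kernel(y, y, bandwidth).mean()
    k_xy = rbf_kernel(x, y, bandwidth).mean()
    return float(k_xx + k_yy - 2.0 * k_xy)


def sim_to_real_score(real, sim, mmd_weight=1.0, bandwidth=1.0):
    """Hypothetical scalar sim-to-real gap; lower means more similar.

    real, sim: arrays of shape (num_samples, num_channels).
    ASSUMPTION: summing per-channel Wasserstein distances and adding a
    weighted joint MMD term is illustrative, not the paper's weighting.
    """
    real = np.asarray(real, dtype=float)
    sim = np.asarray(sim, dtype=float)
    w_total = sum(
        wasserstein_1d(real[:, c], sim[:, c]) for c in range(real.shape[1])
    )
    return w_total + mmd_weight * mmd_squared(real, sim, bandwidth)


# Usage: a simulated rollout shifted far from the hardware data
# should score worse than one shifted only slightly.
rng = np.random.default_rng(0)
real_data = rng.normal(size=(200, 3))
print(sim_to_real_score(real_data, real_data + 0.1))
print(sim_to_real_score(real_data, real_data + 1.0))
```

In a calibration loop, a scalar like this would be the fitness that CMA-ES minimizes over candidate simulation parameters (for example through the ask/tell interface of the `cma` Python package): each candidate is simulated, and the result is scored against the same hardware log. Summing per-channel 1-D Wasserstein distances matches each signal's marginal distribution, while the joint MMD term also responds to correlations between channels that the marginals miss.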

Methods

Locomotion on Normal Ground

Locomotion on Slippery Ground

Push Recovery on Normal Ground

Push Recovery on Slippery Ground

BibTeX

@article{miller2025spotrl,
  title={High-Performance Reinforcement Learning on Spot: Optimizing Simulation Parameters with Distributional Measures},
  author={Miller, AJ and Yu, Fangzhou and Brauckmann, Michael and Farshidian, Farbod},
  journal={arXiv preprint ...},
  year={2025}
}