Abstract

We present a fully autonomous real-world RL framework for mobile manipulation that can learn policies without extensive instrumentation or human supervision. This is enabled by 1) task-relevant autonomy, which guides exploration towards object interactions and prevents stagnation near goal states, 2) efficient policy learning by leveraging basic task knowledge in behavior priors, and 3) formulating generic rewards that combine human-interpretable semantic information with low-level, fine-grained observations. We demonstrate that our approach allows Spot robots to continually improve their performance on a set of four challenging mobile manipulation tasks, obtaining an average success rate of 80% across tasks, a 3-4 times improvement over existing approaches.

Learning via Practice

We show the evolution of the robot’s behavior as it practices and learns skills. These are learned over 8-10 hours practice in the real world.

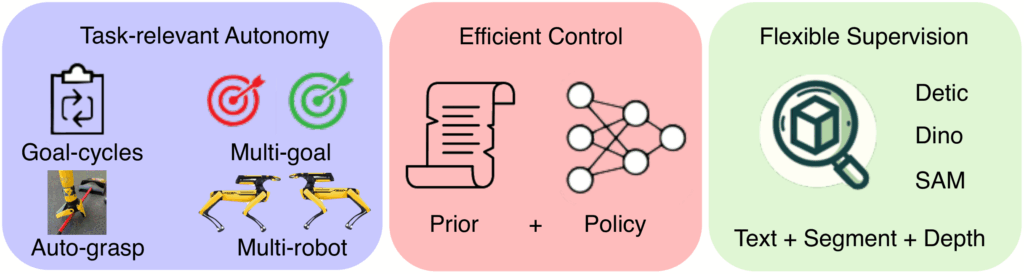

Approach Overview

Task-relevant Autonomy: Ensures data collected is likely to have learning signal.

Efficient Control: Uses signal to learn skills and collect better data.

Flexible Supervision: Determines how to define learning signal for tasks.

Task Relevant Autonomy: Auto-Grasp

The full action space for the robot (left) is very large, and it rarely interacts with objects. Our approach leads the robot to grasp objects (right), leading to data with better signal. Note that the robot isn’t yet performing the task of standing up the dustpan.

Task Relevant Autonomy: Goal-Cycles

Goal cycles ensure the robot can keep learning without stagnation.

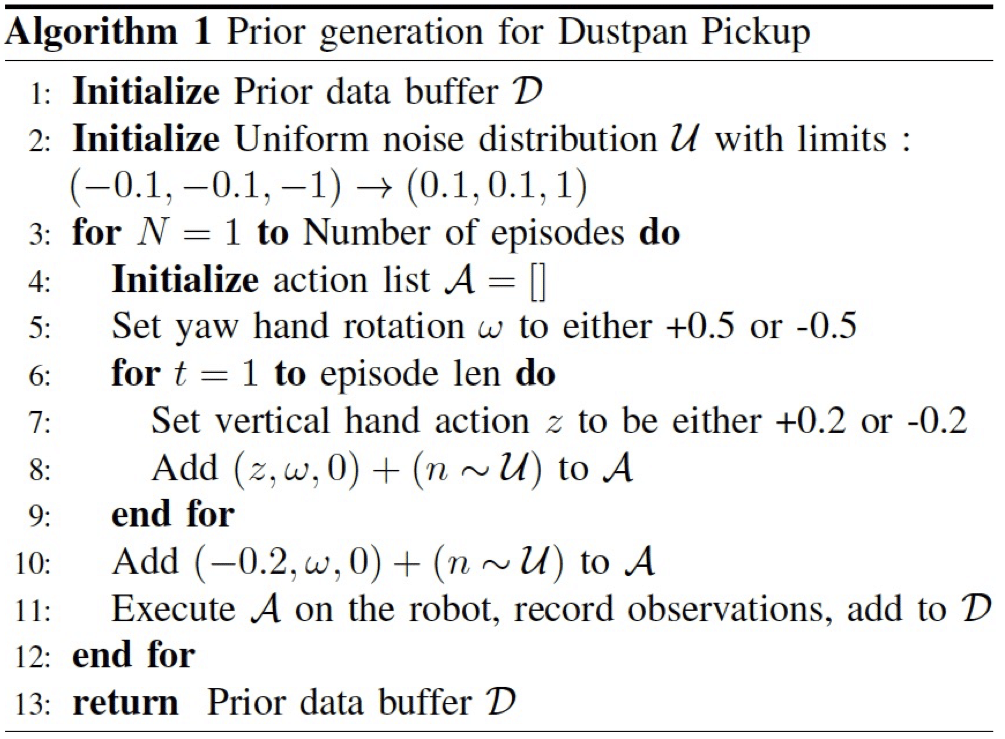

Efficient Control: What Priors Do We Use?

Leveraging priors helps collect data with better learning signal.

Planners (rrt*) with simplified models, for navigation (chair)

Movement restriction based on

distance to detected object (sweeping)

Simple scripted behavior (dustpan standup)

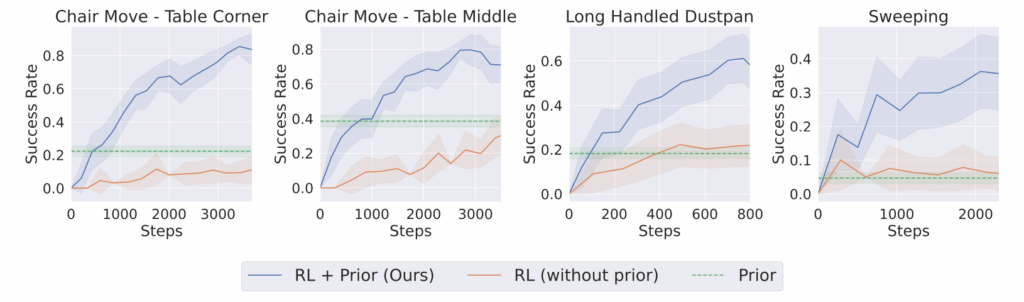

Efficient Control: RL + Priors

We combine RL with behavior priors to learn with very few samples. Priors while useful are not sufficient to reliably perform the task. RL without priors is very inefficient. Note all compared approaches shown use task-relevant autonomy.

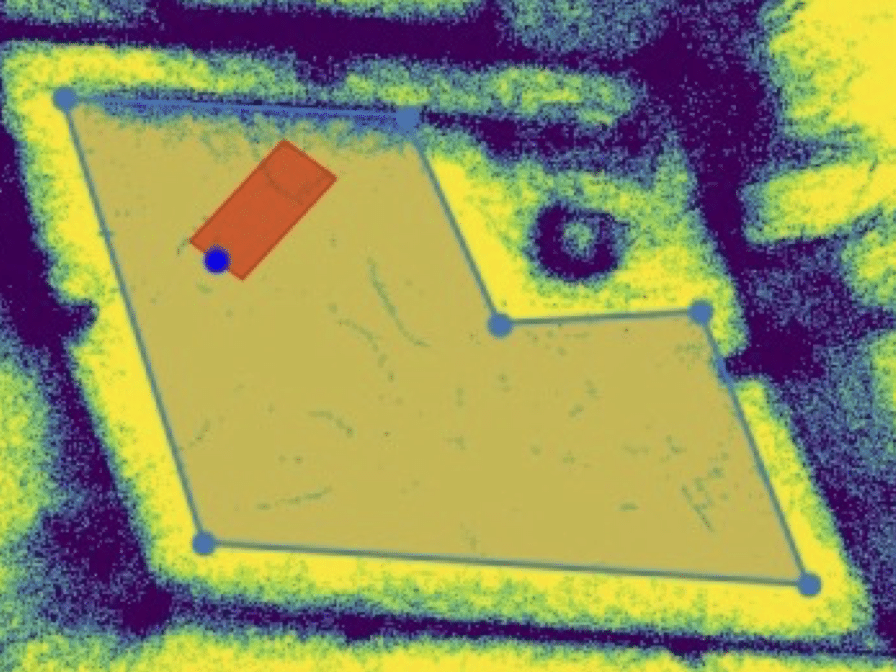

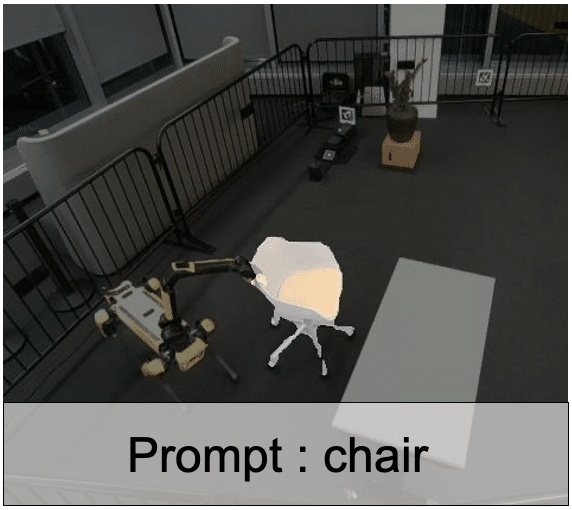

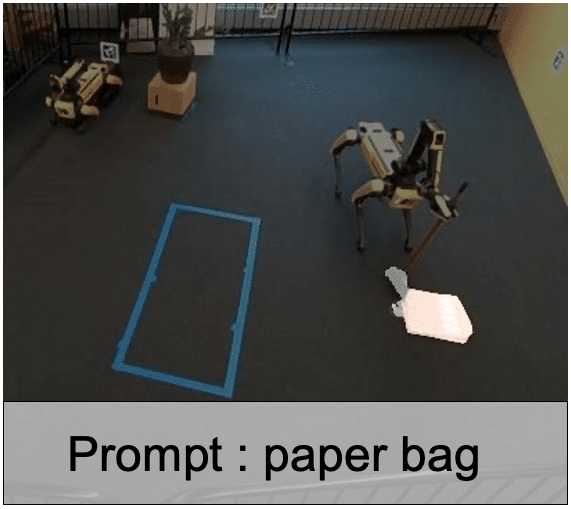

Reward Specification

Text prompts used to get object masks from Segment Anything for chair moving (left) and sweeping (right). These are combined with depth observations to obtain state estimates, used to define reward.

RL Discovery

With higher policy entropy, RL sometimes discovers new behaviors that complete the task, quite different from the prior.

BibTeX

@inproceedings{mendonca2024continuously,

title={Continuously Improving Mobile Manipulation with Autonomous Real-World RL},

author={Mendonca, Russell and Panov, Emmanuel and Bucher, Bernadette and Wang, Jiuguang and Pathak, Deepak},

journal={Conference on Robot Learning},

year={2024}

}