
For robots to perform complex tasks autonomously in the real world, they need to move and handle objects simultaneously while navigating unstructured environments. In robotics, this is called loco-manipulation. While this level of task coordination seems straightforward – humans often move and manipulate objects at the same time – current approaches to loco-manipulation often fall short. Most robots are built for specific purposes, which leaves them constrained by task-specific designs or fixed joint configurations. These limitations make it difficult for them to adapt to diverse environments and scenarios.

However, a recent framework developed at the RAI Institute, ReLIC (Reinforcement Learning for Interlimb Coordination), has demonstrated significant advances in loco-manipulation. This work, produced by Xinghao Zhu, Yuxin Chen, Lingfeng Sun, Farzad Nirou, Simon Le Cleac’h, Jiuguang Wang, and Kuan Fang, proposes a new way to let a robot use any combination of limbs for manipulation, without predefining which limbs play which role.

“We call this concept flexible interlimb coordination, which breaks the traditional barriers of arms and legs, and allows us to change limb assignments dynamically during task execution as appropriate,” says co-lead author Yuxin Chen.

Co-lead authors Xinghao Zhu and Lingfeng Sun add, “What makes this framework powerful is that the robot can fluidly switch which limbs it uses for support or manipulation without needing those roles to be predefined. This opens the door to more natural and adaptable behavior when robots face complex loco-manipulation situations in the real world.”

Although the framework was demonstrated on a quadruped robot, it is broadly applicable to other legged systems, such as humanoid robots. Consider a humanoid carrying a bulky, heavy object through a narrow doorway. To keep its balance, such a robot might momentarily stand on one leg, bracing and guiding the object with the other leg and both arms. At that moment, arms and legs stop serving their traditional roles of manipulation versus locomotion. Instead, every limb becomes part of a flexible, coordinated effort – one arm pushing, another pulling, a foot stabilizing, and whichever legs remain on the ground supporting the body’s weight. Such dynamic reassignment of limb roles is the kind of interlimb coordination that robots have long struggled to achieve.

Challenges with Whole-Body Control

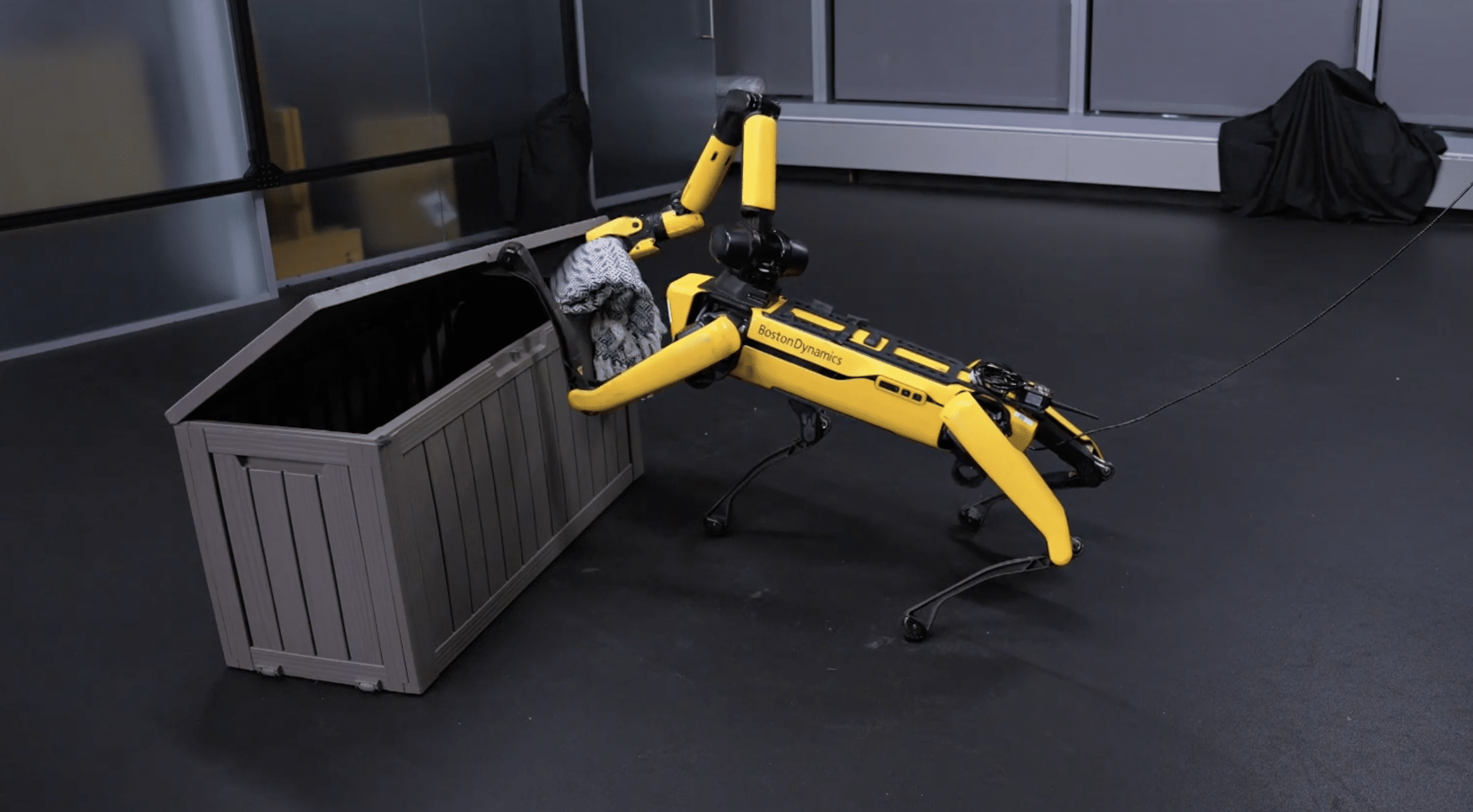

Whole-body loco-manipulation requires successfully completing the manipulation task while simultaneously maintaining balance during locomotion. Consider a quadruped robot with an arm moving a large box across a room. The arm and a leg can work together to grasp the box, essentially creating a large gripper, while the remaining legs provide locomotion. Traditional methods often struggle with such dynamic scenarios, either treating arms and legs separately or relying on fixed limb roles.

Completing these types of loco-manipulation tasks requires optimizing manipulation and locomotion simultaneously. However, this kind of whole-body control is challenging because of the high-dimensional action and state spaces created by the combinations of possible positions, orientations, and joint angles of the robot and the locations of the objects it interacts with. Complex contact dynamics further complicate control, as friction, force, and rotational effects all influence the resulting motion. These effects are hard to represent accurately in mathematical models, which makes it harder to optimize the robot’s performance or behavior.

Versatile manipulation and limb role assignment enable a robot to use its entire body – torso, legs, and feet – rather than just its hands. Sophisticated coordination is required to manage transitions such as shifting from walking on four legs to supporting itself on three while one leg or arm performs a task. While determining when to switch limbs between locomotion and manipulation roles presents coordination challenges, the ability to manipulate with several parts of the body expands the functional capacity of the robot.

ReLIC Architecture and Methodology

Instead of a monolithic, end-to-end policy, ReLIC decouples the loco-manipulation problem into two interconnected sub-problems, effectively bridging manipulation success with locomotion stability:

- Manipulation Module (Model-Based): This module prioritizes task success, utilizing a model-based controller (e.g., an inverse kinematics solver to calculate variable joint parameters) to achieve desired end-effector targets for designated manipulation limbs.

- Locomotion Module (Reinforcement Learning): For dynamic behavior requiring robustness and adaptability, the team trains a reinforcement learning (RL) policy. This policy maintains stable gaits in accordance with the manipulation behavior.

The key to ReLIC’s success lies in the seamless communication between these two modules. They interact through the robot’s whole-body state and a binary mask that specifies whether a limb is currently assigned to manipulation or locomotion. This intelligent decoupling avoids rigid heuristics that typically cannot adjust to new or changing circumstances while preserving crucial inter-module coordination. Moreover, the decoupling reduces the action spaces to make the training of the controller more efficient.
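To make the role of the binary mask concrete, here is a minimal sketch of how per-limb assignments might select between the two modules' outputs. It is an illustration only: the limb names, joint indices, `NUM_JOINTS`, and the functions `joint_mask` and `compose_action` are assumptions invented for this example, not ReLIC's actual interface.

```python
import numpy as np

# Hypothetical joint layout for a quadruped with one arm (an assumption,
# not the robot's real joint ordering).
LIMB_JOINTS = {
    "front_left": [0, 1, 2],
    "front_right": [3, 4, 5],
    "rear_left": [6, 7, 8],
    "rear_right": [9, 10, 11],
    "arm": [12, 13, 14, 15, 16, 17],
}
LIMB_ORDER = list(LIMB_JOINTS)
NUM_JOINTS = 18


def joint_mask(limb_mask):
    """Expand a per-limb binary mask (1 = manipulation) to a per-joint mask."""
    mask = np.zeros(NUM_JOINTS, dtype=bool)
    for limb, flag in zip(LIMB_ORDER, limb_mask):
        if flag:
            mask[LIMB_JOINTS[limb]] = True
    return mask


def compose_action(limb_mask, manip_targets, loco_targets):
    """Take manipulation targets for masked joints, locomotion targets elsewhere."""
    return np.where(joint_mask(limb_mask), manip_targets, loco_targets)


# Example: the front-right leg and the arm manipulate; three legs walk.
limb_mask = [0, 1, 0, 0, 1]
manip_targets = np.ones(NUM_JOINTS)   # stand-in for model-based controller output
loco_targets = np.zeros(NUM_JOINTS)   # stand-in for RL policy output
action = compose_action(limb_mask, manip_targets, loco_targets)
```

Changing `limb_mask` between control steps is all it takes to reassign a limb in this sketch, which mirrors the idea of switching roles during task execution rather than fixing them in advance.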

Gait Regularization and Stability

To ensure stable locomotion across varying limb coordination patterns, ReLIC implements contact-time-based gait regularization during policy training, which uses rewards and penalties to train the robot toward a desired walking rhythm and timing. The system enforces a trotting gait for quadrupedal locomotion, with symmetric contact patterns between diagonal limb pairs, and a three-phase bouncing gait for tripedal locomotion when one limb is designated for manipulation.

This approach provides better training stability than phase-based regularization methods by avoiding state-dependent phase variable sampling.
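As a rough illustration of what a contact-time-based term can look like, the sketch below tracks how long each foot has been in stance or swing and penalizes durations that deviate from a target when the phase ends. The function names, the 0.3-second targets, and the exact penalty form are assumptions made for this example; the reward used in ReLIC may differ.

```python
import numpy as np


def update_timers(contact, contact_time, air_time, dt):
    """Accumulate per-foot stance and swing durations; reset on phase change."""
    contact_time = np.where(contact, contact_time + dt, 0.0)
    air_time = np.where(contact, 0.0, air_time + dt)
    return contact_time, air_time


def gait_penalty(contact, prev_contact, contact_time, air_time, loco_mask,
                 target_stance=0.3, target_swing=0.3):
    """Penalize stance/swing durations that miss their targets.

    contact_time and air_time are the timers accumulated up to the previous
    step, so call this before update_timers. Limbs assigned to manipulation
    (loco_mask False) are ignored.
    """
    touchdown = contact & ~prev_contact & loco_mask   # a swing phase just ended
    liftoff = ~contact & prev_contact & loco_mask     # a stance phase just ended
    swing_err = np.abs(air_time - target_swing)[touchdown].sum()
    stance_err = np.abs(contact_time - target_stance)[liftoff].sum()
    return -(swing_err + stance_err)


# Example step: foot 0 lifts off, foot 1 touches down, foot 3 is manipulating.
prev_contact = np.array([True, False, True, False])
contact = np.array([False, True, True, True])
contact_time = np.array([0.25, 0.0, 0.1, 0.0])
air_time = np.array([0.0, 0.4, 0.0, 0.9])
loco_mask = np.array([True, True, True, False])
penalty = gait_penalty(contact, prev_contact, contact_time, air_time, loco_mask)
contact_time, air_time = update_timers(contact, contact_time, air_time, dt=0.02)
```

Because the term depends only on measured contact durations, it needs no explicit phase variable, which is the property the paragraph above contrasts with phase-based regularization.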

Sim-to-Real Transfer and Motor Calibration

Training in simulation has many benefits, from generating data for training complex policies to reducing hardware repairs from testing. One of the challenges, however, is the sim-to-real gap, which becomes particularly pronounced when the robot walks on three legs, because each motor must operate closer to its performance limits to sustain higher payloads. The ReLIC framework addresses this gap through domain randomization during training, covering robot dynamics, terrain properties, and external disturbances. Even so, dynamic three-legged locomotion remained difficult to transfer because of unmodeled motor parameter variations.
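Domain randomization of this kind typically means drawing new physical parameters for each training episode. The sketch below shows the general pattern for the three categories named above. The specific parameters, their ranges, and the names `EpisodeParams` and `sample_episode_params` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class EpisodeParams:
    payload_mass: float          # robot dynamics: extra mass on the body (kg)
    motor_strength_scale: float  # robot dynamics: multiplier on motor torque
    ground_friction: float       # terrain property: friction coefficient
    push_force: float            # external disturbance: random push (N)


def sample_episode_params(rng):
    """Draw one set of randomized parameters; all ranges are assumed values."""
    return EpisodeParams(
        payload_mass=rng.uniform(0.0, 3.0),
        motor_strength_scale=rng.uniform(0.8, 1.2),
        ground_friction=rng.uniform(0.4, 1.2),
        push_force=rng.uniform(0.0, 30.0),
    )


rng = np.random.default_rng(0)
params = sample_episode_params(rng)  # would be applied to the simulator at reset
```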

The solution was a motor calibration procedure. After initial simulation training, the team collected real-world data and optimized torque limits as functions of the joint state. The policy was then fine-tuned in simulation with these calibrated parameters, ensuring robust real-world performance.
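One simple way to picture a state-dependent torque limit is a torque-speed curve: the faster a joint spins, the less torque the motor can deliver. The sketch below fits such a curve to logged data and applies it as a clamp in simulation. The linear model, the sample numbers, and the functions `fit_torque_limit` and `clip_torque` are assumptions for illustration; the article does not specify the model form the team used.

```python
import numpy as np


def fit_torque_limit(joint_speed, saturated_torque):
    """Least-squares fit of tau_max(|qd|) = tau_stall - slope * |qd|."""
    coef, tau_stall = np.polyfit(np.abs(joint_speed), saturated_torque, deg=1)
    return tau_stall, -coef


def clip_torque(tau_cmd, joint_speed, tau_stall, slope):
    """Clamp a commanded torque to the speed-dependent limit."""
    limit = np.maximum(tau_stall - slope * np.abs(joint_speed), 0.0)
    return np.clip(tau_cmd, -limit, limit)


# Made-up measurements: speed (rad/s) and the peak torque observed there (N*m).
speeds = np.array([0.0, 5.0, 10.0])
peak_torques = np.array([40.0, 30.0, 20.0])
tau_stall, slope = fit_torque_limit(speeds, peak_torques)

# A 50 N*m command at 5 rad/s is clamped to the fitted limit at that speed.
applied = clip_torque(50.0, 5.0, tau_stall, slope)
```

Using the fitted clamp inside the simulator during fine-tuning keeps the policy from relying on torques the real motors cannot produce at a given joint speed.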

Versatile Task Specification

ReLIC’s flexibility extends to how users can command the robot. It can interface with user input at the task level through three intuitive modalities:

- Direct Targets: Manually specify target trajectories for end effectors of selected limbs used for manipulation (e.g., via teleoperation).

- Contact Points: Describe tasks by defining key contact points and associated motions, from which target trajectories are generated via motion planning.

- Language Instructions: Leverage vision-language models (VLMs) to infer contact points and trajectories from natural language commands, offering more flexibility.
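The contact-point modality can be pictured as a small data structure that a planner turns into end-effector targets. In the sketch below, straight-line interpolation stands in for a real motion planner, and `ContactSpec`, `plan_targets`, the limb name, and the coordinates are all hypothetical.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ContactSpec:
    limb: str                  # which limb makes contact
    contact_point: np.ndarray  # where it touches the object, world frame (m)
    displacement: np.ndarray   # desired motion of that contact point (m)


def plan_targets(spec, start, steps=5):
    """Straight-line waypoints: start -> contact point -> displaced contact point."""
    goal = spec.contact_point + spec.displacement
    approach = np.linspace(start, spec.contact_point, steps)
    move = np.linspace(spec.contact_point, goal, steps)
    return np.vstack([approach, move[1:]])  # drop the duplicated contact point


# Example: a front leg reaches a drawer handle and pulls it 20 cm toward the robot.
spec = ContactSpec(
    limb="front_left",
    contact_point=np.array([0.6, 0.2, 0.3]),
    displacement=np.array([-0.2, 0.0, 0.0]),
)
targets = plan_targets(spec, start=np.array([0.3, 0.2, 0.0]))
```

In this picture, the direct-target modality would supply `targets` by hand or via teleoperation, while the language modality would produce the `ContactSpec` from a natural language command.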

Quantitative Evaluation Framework and Performance Analysis

To explore practical applications of this research, ReLIC was evaluated on 12 real-world loco-manipulation tasks categorized into three coordination types:

Mobile Interlimb Coordination: Tasks requiring object manipulation with both the arm and a leg during locomotion.

- Examples: Putting a yoga ball into a basket, lifting and moving a box

Stationary Interlimb Coordination: Complex manipulation with static torso balance. “Multi-contact” manipulation is required, as arm-only manipulation cannot complete such tasks.

- Examples: Placing a blanket in a deck box, opening and placing an item in a drawer or in a tool chest, opening a large and small trash bin with a foot pedal, using a tire pump

Foot-Assisted Manipulation: Tasks in which the arm and a leg are both used for manipulation.

- Examples: Placing items from a shelf into a basket, dragging a storage bin or laundry bag and placing items in it, moving objects out of the way while rolling a chair.

ReLIC consistently outperformed baseline methods (end-to-end RL and MPC), achieving an average success rate of 78.9% across all 12 tasks.

ReLIC demonstrates robust gait transitions, which allow the robot to switch between four-legged and three-legged walking without pausing or resetting its stance. It also enables precise end-effector tracking: while walking with three support legs, the robot tracks independent rhombus-shaped trajectories with both its arm and a lifted leg. One of the most important results, however, is ReLIC’s adaptability to diverse tasks. The framework allows the robot to perform tasks that include object picking, displacement, locomotion, and maintaining forceful contact.

Future Research Directions and Implications for Autonomous Systems

The ReLIC framework demonstrates its ability to handle multiple input modalities and achieve robust performance. This work opens possibilities for future research, including exploring learned controllers for more complex manipulation, integrating real-time feedback, and extending ReLIC to broader forms of interlimb coordination and robot morphologies. We hope ReLIC will inspire further research at the intersection of reinforcement learning and whole-body control, focusing on the role of adaptive interlimb coordination in advancing robotic capabilities.

ReLIC: Versatile Loco-Manipulation through Flexible Interlimb Coordination is authored by Xinghao Zhu, Yuxin Chen, Lingfeng Sun, Farzad Nirou, Simon Le Cleac’h, Jiuguang Wang, and Kuan Fang and is scheduled to be presented at the Conference on Robot Learning (CoRL) in September 2025. More details and videos are available here: https://relic-locoman.rai-inst.com/