What an athlete can do when competing in mountain bike trials is astounding. Competitors are able to balance, bounce, and jump across obstacles, affordances, and terrains in ways that make it appear almost easy. This performance takes an impressive combination of biking skills and athleticism but also requires extraordinary human perception and intelligent planning. The courses these athletes are able to maneuver and navigate through are too tough for any standard vehicle to traverse.

While AI has made rapid progress in language and vision, directing robots to move swiftly and effortlessly through the world remains an unsolved problem. At the RAI Institute, one of our main research projects seeks to capture that athleticism and intelligence and pair them with remarkable rough-terrain mobility, efficiency, and navigation – creating a robot that essentially thinks and moves like a world-class athlete. The challenge embraces every element of the robot’s construction, including the mechanical design, onboard power, control systems, software, perception, and intelligence.

Why Build a Bike Robot?

While dynamic legged robots have advantages on rough terrain with their ability to leap over and onto obstacles and use isolated footholds, wheeled robots are much more efficient and can cover flat ground faster. It follows that combining the jumping capabilities of a legged robot with the efficiency of a bicycle yields a robot designed for ultra mobility and performance.

The goal of the Ultra Mobility Vehicle (UMV) project at the RAI Institute is to first build a robot that combines these strengths of legs and wheels, and then use AI to unlock its physical capabilities. This research includes giving the robot cognitive intelligence and situational awareness, so it can reason effectively about its ability to move through the world. This world would include the type of terrain that trials cyclists negotiate, with large gaps and vertical steps up or down, rocks, hand rails, pedestals, downhill race courses, and the like.

This research helps us better understand robot control, state estimation, and perception. The UMV project team includes roboticists with expertise in mechanical engineering, electrical engineering, controls, machine learning, software, and perception.

Bike + Brain = Jump

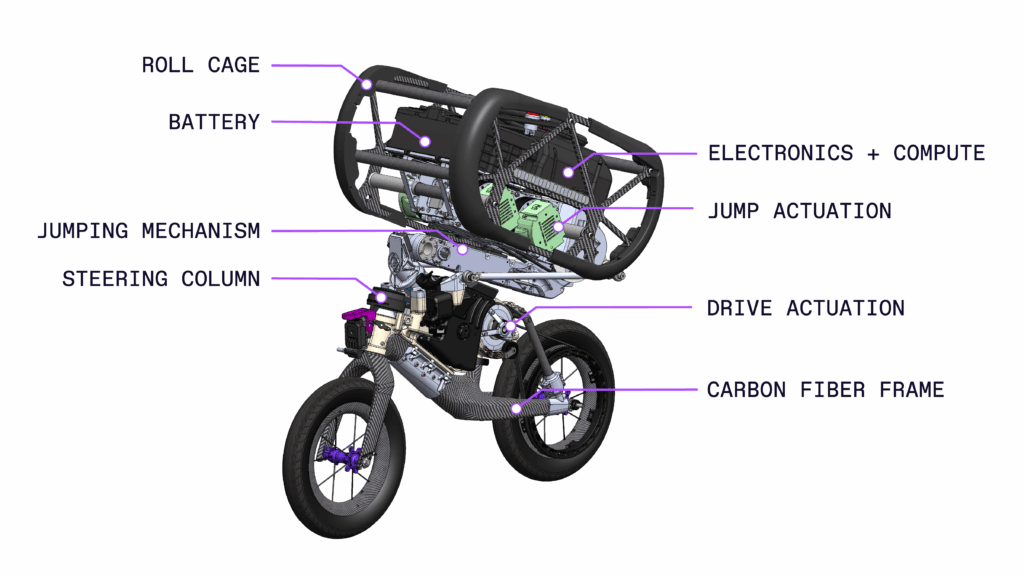

The basic structure of the UMV robot can be divided into upper and lower halves. The lower half is a lightweight carbon fiber bike frame, while the upper half is a mechanism that allows the robot to do jumps, flips, and hops. The robot has two motors in the lower half that control steering and the forward velocity of the bike. The upper half has four motors, used in pairs, for jumping. This part of the robot is a custom-built actuation system that concentrates most of the robot’s mass in its uppermost body. We call this the “head”, which is where onboard power and compute are located. The computer inside the head runs the robot’s dynamic controls. These algorithms let the robot regulate important physical quantities such as acceleration, angular velocity, and jump height by sending commands to the motors.

The Z-shape of the robot allows for large changes in its size: when tucked, the robot measures about 80 cm tall, but it can extend to over 152 cm when jumping. To make jumping as effective as possible, the majority of the mass of the robot is above the jumping mechanism (i.e., the sprung mass), while as little as possible is below it and close to the ground (i.e., the unsprung mass). Once airborne, it can “tuck” itself by lifting the lightweight lower bike portion up – much as a person would pull their legs up to jump over an obstacle. Weighing about 23 kg, the robot is designed to be light enough to get off the ground with ease but robust enough to withstand rigorous testing. The actuation of the jumping mechanism has been strengthened to withstand aggressive launches, large landing impacts, and inevitable crashes during development.
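The benefit of placing most of the mass above the jumping mechanism can be illustrated with a simple two-mass model. The sketch below is an idealization and not the team's own analysis: it assumes the sprung mass reaches some upward velocity at liftoff while the unsprung mass is still at rest on the ground, so the center of mass leaves the ground with a velocity scaled by the sprung-mass fraction. The 23 kg total comes from the text above; the mass splits and the 4 m/s body velocity are hypothetical values chosen only for illustration.

```python
G = 9.81  # gravitational acceleration, m/s^2


def com_takeoff_velocity(sprung_kg, unsprung_kg, body_velocity):
    """Center-of-mass velocity at liftoff.

    Assumes the sprung mass moves upward at body_velocity while the
    unsprung mass is still at rest on the ground (idealized model).
    """
    return sprung_kg * body_velocity / (sprung_kg + unsprung_kg)


def com_rise(sprung_kg, unsprung_kg, body_velocity):
    """Ballistic rise of the center of mass after liftoff, in meters."""
    v = com_takeoff_velocity(sprung_kg, unsprung_kg, body_velocity)
    return v ** 2 / (2 * G)


TOTAL_KG = 23.0       # approximate robot mass quoted in the article
BODY_VELOCITY = 4.0   # hypothetical sprung-mass velocity at liftoff, m/s

for sprung_fraction in (0.5, 0.7, 0.9):  # hypothetical mass splits
    sprung = TOTAL_KG * sprung_fraction
    unsprung = TOTAL_KG - sprung
    rise = com_rise(sprung, unsprung, BODY_VELOCITY)
    print(f"sprung fraction {sprung_fraction:.1f}: COM rises {rise:.2f} m")
```

In this model, only the fraction of the body's kinetic energy equal to the sprung-mass fraction ends up lifting the center of mass, which is one way to see why a light lower half helps.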

A key challenge for robots like UMV is having onboard power that can deliver enough energy to the motors without excessive mass penalties. UMV uses custom-tuned batteries that are lightweight yet powerful – and most importantly, that meet established safety standards.

Movement, Measurement, and Navigation

In order to drive, balance, jump and flip, UMV has a collection of sensors to help it navigate its environment. These include:

- Joint encoders on all actuated degrees of freedom

- Inertial Measurement Units (IMUs) that measure body acceleration and the gravity vector. By fusing multiple acceleration measurements across the robot, UMV can also estimate when it’s in contact with the world, as well as the magnitude and direction of the ground reaction forces

- High-speed time of flight sensors that measure the robot’s height above the ground so that it can anticipate and prepare for landing

- LiDAR, which creates a point cloud of the terrain around the robot, allowing it to keep track of where it is in its local environment, and

- A forward-looking camera tracking identifiable visual fiducials, which can be used for localization and navigation in a laboratory environment
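Two of the sensing ideas in this list lend themselves to a short worked sketch. The code below is not UMV's estimator. It assumes the fused IMU measurements have already been reduced to a single specific-force vector at the center of mass, expressed in a world frame with z pointing up, and it ignores aerodynamic drag. Under those assumptions, mass times specific force gives the net ground reaction force, a near-zero reading indicates free fall, and a height reading plus a descent speed gives a time-to-touchdown estimate. The function names and the contact threshold are hypothetical.

```python
import math

G = 9.81               # gravitational acceleration, m/s^2
ROBOT_MASS_KG = 23.0   # approximate robot mass quoted in the article


def ground_reaction_force(specific_force, mass_kg=ROBOT_MASS_KG):
    """Net non-gravitational external force, in newtons.

    An accelerometer measures specific force (acceleration minus gravity),
    so mass times the specific force at the center of mass equals the sum
    of contact forces acting on the robot.
    """
    return tuple(mass_kg * f for f in specific_force)


def in_contact(specific_force, threshold=2.0):
    """Return True if the specific-force magnitude exceeds a threshold.

    In free fall the reading is close to zero; the threshold (m/s^2) is a
    hypothetical tuning value.
    """
    return math.sqrt(sum(f * f for f in specific_force)) > threshold


def time_to_touchdown(height_m, descent_speed):
    """Solve height = v*t + 0.5*g*t^2 for the non-negative root t.

    descent_speed is positive when the robot is moving downward.
    """
    v = descent_speed
    return (-v + math.sqrt(v * v + 2 * G * height_m)) / G


standing = (0.0, 0.0, 9.81)   # at rest on the ground
airborne = (0.0, 0.0, 0.1)    # nearly zero specific force in flight
print(in_contact(standing), ground_reaction_force(standing))
print(in_contact(airborne))
print(time_to_touchdown(height_m=1.0, descent_speed=0.0))
```

A real system would need to filter sensor noise and account for where each IMU sits on the robot, which this sketch leaves out.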

Jumps, Flips, and Tricks with Reinforcement Learning

UMV can repeatedly jump onto and off of a one-meter table, do front flips, hold a sustained wheelie while driving around, and hop continuously on its rear wheel – much like a hopping legged robot. Machine learning (ML) paired with the robot’s unique mechanical design unlocks these dynamic abilities. The UMV team uses reinforcement learning (RL) to train the robot to achieve these behaviors.

RL is a type of AI-based training where a robot uses physics-based simulation to learn a desired behavior through trial and error. The training process rewards or penalizes an RL policy – as it directs the robot to interact with its simulated environment – based on whether it performs desired tasks well. In the case of UMV, those tasks are things like driving forward, turning, jumping, and maintaining balance. The UMV team uses NVIDIA’s Isaac Lab to train their RL policies, as the framework utilizes a powerful physics engine and the team’s own models to accelerate the training process significantly.

Through millions of simulations, a controller is trained by figuring out which sequences of actions produce the most reward given the current state of the robot (e.g. how fast it is moving, its orientation relative to gravity, the position of the joints, how close the robot is to a goal location, what the terrain looks like in front of it). Good behavior gets reinforced; poor behavior gets penalized and eventually suppressed. Over time, the simulated robot learns how it should “behave” without being explicitly programmed by hand.
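To make the idea of rewards and penalties concrete, here is a minimal sketch of what a per-step reward for a "drive to a goal while staying upright" task might look like. It is not the UMV team's reward and does not use the Isaac Lab API. The `RobotState` fields, the weights, and the target speed are all hypothetical, loosely based on the state quantities listed above (speed, orientation relative to gravity, distance to a goal).

```python
import math
from dataclasses import dataclass


@dataclass
class RobotState:
    forward_speed: float     # m/s
    tilt_rad: float          # angle away from upright, relative to gravity
    distance_to_goal: float  # m
    fallen: bool             # True if the robot has crashed


def reward(state, prev_distance, target_speed=2.0):
    """Hypothetical per-step reward: progress, speed tracking, balance."""
    # Reward progress made toward the goal since the previous step.
    progress = prev_distance - state.distance_to_goal
    # Peaks at 1.0 when the robot drives at the target speed.
    speed_term = math.exp(-((state.forward_speed - target_speed) ** 2))
    # Equals 1.0 when perfectly upright and decreases as the robot tilts.
    upright_term = math.cos(state.tilt_rad)
    # Large penalty for falling over.
    fall_penalty = -10.0 if state.fallen else 0.0
    return 1.0 * progress + 0.5 * speed_term + 0.5 * upright_term + fall_penalty


good_step = RobotState(forward_speed=2.0, tilt_rad=0.0, distance_to_goal=4.0, fallen=False)
bad_step = RobotState(forward_speed=0.0, tilt_rad=1.2, distance_to_goal=5.0, fallen=True)
print(reward(good_step, prev_distance=5.0))
print(reward(bad_step, prev_distance=5.0))
```

During training, the learning algorithm adjusts the policy so that actions leading to higher accumulated reward become more likely; adjusting weights like these is one form of the reward tweaking described in the next paragraph.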

At this stage, the full policy deployment process for UMV is equal parts human and machine. New policies are trained in simulation and then deployed on the hardware, observed by the UMV project team and then tweaked in simulation – by adjusting the rewards – until the desired behavior is realized on the physical robot. The team collects and reviews extensive data from the robot to facilitate this process.

Simulation is effectively a requirement for training these RL policies. Learning such intense dynamic behaviors on the actual robot wouldn’t be feasible, as the cost of running millions of trials, most of which are unsuccessful, would be prohibitive in terms of robot breakage and failure. This dependence on simulation makes the physical accuracy of our robot models very important. When a policy trained in simulation doesn’t perform well on the actual robot, there is typically a significant difference between the simulation’s prediction of physics and the real world. This is called the “sim-to-real gap.” The sim-to-real gap is typically addressed in two ways: (1) by improving the model of the actual robot, and (2) by training the RL policies in simulation with randomized parameters.

For (2), parameters such as the masses of the robot links, the torque constants of the motors, and ground friction may be randomized. This helps the policies account for uncertainties and variations found in the real world. For (1), focused tests may be performed to get a better idea of the actual robot properties. A good example of this would be looking closely at the bouncing properties of the actual robot wheels when they impact the ground. For this, we built simple drop-test stands for the wheels that let us look at peak impact forces, or how long impact events would take to settle to rest. Empirically verified models of the entire power system of the robot may also be included, all the way from modeling the torque production of the motors to a non-trivial battery model that predicts things like voltage sag. All of these additions to the simulation can significantly reduce the sim-to-real gap and make policy training and deployment on the robot much more successful.
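The parameter randomization described above can be sketched in a few lines. This is a generic illustration rather than the team's training configuration or an Isaac Lab interface. The `SimParams` fields mirror the three quantities named in the text, and the sampling ranges are hypothetical placeholders.

```python
import random
from dataclasses import dataclass


@dataclass
class SimParams:
    link_mass_scale: float        # multiplier on nominal link masses
    torque_constant_scale: float  # multiplier on nominal motor torque constants
    ground_friction: float        # friction coefficient of the ground


def sample_params(rng):
    """Draw one randomized set of simulation parameters.

    The ranges are hypothetical; in practice they would be chosen to
    cover the uncertainty in the real robot and its environment.
    """
    return SimParams(
        link_mass_scale=rng.uniform(0.9, 1.1),
        torque_constant_scale=rng.uniform(0.9, 1.1),
        ground_friction=rng.uniform(0.5, 1.2),
    )


rng = random.Random(0)  # seeded so the draws are reproducible
for episode in range(4):
    # Each training episode would run with a freshly sampled parameter set.
    print(episode, sample_params(rng))
```

Because the policy never sees the same physical parameters twice, it is pushed toward behavior that works across the whole range instead of one that exploits a single, possibly inaccurate, model.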

Next Steps in High-Performance Robotic Mobility

The successful execution of complex maneuvers is a good first step towards the project’s goal of creating an ultra mobile robot that can traverse extreme terrain. These skills – driving, jumping, wheelies, hopping, and flipping – are all part of building a foundation of behavior for more complex skill sequences that can be used to navigate both structured and unstructured environments autonomously.

Going forward, the UMV team will be working on enhancing the design of the robot for even better performance, and will be combining tricks and skills into complex action sequences. Long-term, the team will integrate flexible, high-performance perception that will give the system both situational and terrain awareness, including identifying obstacles and affordances in the world. This research will enable cognitive intelligence that allows UMV to understand its own physical capabilities (i.e. its athletic intelligence), understand the terrain in front of it, and work out how it can negotiate its way freely in the wild.